At Activera Consulting, we recently sat down with one of our ecosystem partners, Domino Data Lab, to compare notes on the Machine Learning Operations (MLOps) pain points we’re both seeing in the Energy industry.

Energy companies have a workforce and data profile unlike most other industries. The helicopter view is that there’s a high percentage of engineering talent, a significant volume of unstructured data, and a federated system of business units, given the global nature of these multinational corporations. These factors can exacerbate the challenges technology and analytics teams encounter in their day-to-day work of delivering value through data science.

Let’s take a look at each of these problems in more depth and then discuss how an effective MLOps Platform can help resolve them:

Citizen Development

Problem – Energy companies have a high percentage of engineering talent in their workforce. These engineers are trying to build and use machine learning applications, but they aren’t sure how to access compute or determine the proper requirements for executing jobs on the right infrastructure.

Solution – Engineering talent needs a product that provides access to CPU, GPU, and distributed compute (such as Spark, Ray, and Dask) while abstracting away the complexity. That access should be centralized under one System of Record platform for auditability, reproducibility, and reusability.

High-Performance Computing

Problem – Energy companies, especially in the Upstream sub-surface space, hold substantial volumes of ‘heavy’ seismic data, which makes Cloud optimization challenging. In fact, our collective experience shows a recent trend of repatriating these workloads from the Cloud to on-premises infrastructure, given the cost savings that can be realized from a local GPU cluster.

Solution – An MLOps Platform should support multi-cloud environments, connecting to any Cloud provider, any region (for data sovereignty), and on-premises infrastructure. Bottom line: it shouldn’t matter where your data is or where you want to perform your computations. Additionally, your MLOps Platform should be able to show you which compute is best suited to your workload and give you visibility into Cloud costs, so you can process efficiently and save money.
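To make the idea of matching a workload to the right compute a little more concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the tier names, locations, and hourly prices are made-up placeholders rather than figures from any Cloud provider or MLOps product, and `cheapest_tier` and `estimate_cost` are illustrative helpers, not a platform API. The sketch filters the tiers that meet a job’s stated requirements and picks the one with the lowest hourly price.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ComputeTier:
    """One compute option a platform could offer (all values hypothetical)."""
    name: str
    location: str        # a Cloud region or "on-prem"
    cpus: int
    gpus: int
    memory_gb: int
    usd_per_hour: float  # placeholder price, not a real quote


# Hypothetical catalogue; names and prices are for illustration only.
TIERS = [
    ComputeTier("small-cpu", "cloud-region-a", 4, 0, 16, 0.20),
    ComputeTier("large-cpu", "cloud-region-a", 32, 0, 128, 1.60),
    ComputeTier("cloud-gpu", "cloud-region-b", 16, 4, 256, 12.00),
    ComputeTier("onprem-gpu", "on-prem", 16, 4, 256, 5.00),
]


def cheapest_tier(
    min_cpus: int,
    min_gpus: int,
    min_memory_gb: int,
    allowed_locations: Optional[Iterable[str]] = None,
    tiers: Iterable[ComputeTier] = TIERS,
) -> Optional[ComputeTier]:
    """Return the lowest-priced tier that satisfies the requirements, or None."""
    allowed = set(allowed_locations) if allowed_locations is not None else None
    candidates = [
        t for t in tiers
        if t.cpus >= min_cpus
        and t.gpus >= min_gpus
        and t.memory_gb >= min_memory_gb
        and (allowed is None or t.location in allowed)
    ]
    return min(candidates, key=lambda t: t.usd_per_hour) if candidates else None


def estimate_cost(tier: ComputeTier, hours: float) -> float:
    """Estimated cost of running a job on a tier for the given number of hours."""
    return tier.usd_per_hour * hours


if __name__ == "__main__":
    # A GPU job with no location constraint, then the same job pinned to one region.
    anywhere = cheapest_tier(min_cpus=8, min_gpus=1, min_memory_gb=64)
    pinned = cheapest_tier(8, 1, 64, allowed_locations={"cloud-region-b"})
    print(anywhere.name, estimate_cost(anywhere, hours=10))
    print(pinned.name, estimate_cost(pinned, hours=10))
```

The `allowed_locations` argument stands in for a data sovereignty rule: the engineer describes what the job needs and where it may run, and the selection logic handles the rest.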

Federated Connectivity for Generative AI

Problem – Energy companies, given their distributed nature, typically have federated business units and functions. There are so many data sources that it’s challenging to extract and vectorize the data needed to train industry-specific Large Language Models (LLMs) for generative AI.

Solution – An MLOps Platform should be versatile enough to connect models to any data source. When combined with something like Trino (an enterprise-ready query engine), data from many sources can be exposed through a single connection point and accessed by your MLOps Platform without migration into a Data Lake, saving time and money.
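As a rough illustration of that single connection point: Trino addresses tables as `catalog.schema.table`, so one SQL statement can join data that lives in different systems. The sketch below only builds such a query string in Python. The catalog, schema, table, and column names (`postgres_ops`, `s3_lake`, `well_id`, and so on) are hypothetical placeholders, and actually running the query would require a Trino cluster with matching catalogs configured.

```python
def qualified(catalog: str, schema: str, table: str) -> str:
    """Build a fully qualified Trino table name: catalog.schema.table."""
    return f"{catalog}.{schema}.{table}"


def build_federated_query(wells_table: str, sensors_table: str, limit: int = 100) -> str:
    """Return one SQL statement that joins tables from two different catalogs.

    The column names used here are hypothetical and chosen for illustration.
    """
    return (
        "SELECT w.well_id, w.field_name, avg(s.pressure) AS avg_pressure\n"
        f"FROM {wells_table} AS w\n"
        f"JOIN {sensors_table} AS s ON s.well_id = w.well_id\n"
        "GROUP BY w.well_id, w.field_name\n"
        f"LIMIT {int(limit)}"
    )


if __name__ == "__main__":
    # Hypothetical sources: an operational database and an object-store catalog.
    wells = qualified("postgres_ops", "public", "wells")
    sensors = qualified("s3_lake", "telemetry", "sensor_readings")
    print(build_federated_query(wells, sensors, limit=50))
```

The resulting string could be submitted through any Trino client. The data stays in its source systems, and only the query result comes back to the caller.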

These three challenges top the list for Energy, but there are a number of additional pain points common to analytics teams everywhere. These include a lack of standardization across projects, ineffective model monitoring, little-to-no model reusability, and increased time-to-value given the inability to put models into production quickly. A good MLOps Platform should solve these issues too.

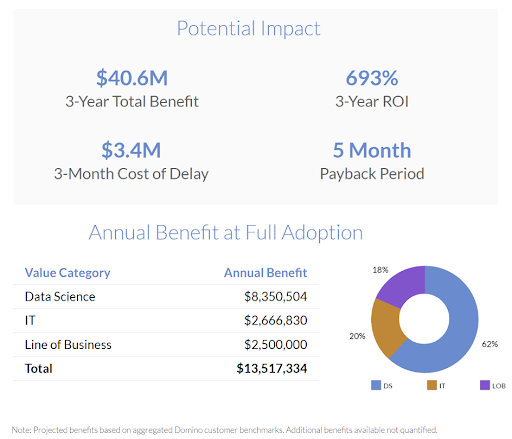

Another elephant in the room is the cost and time associated with implementing an MLOps Platform. Our research shows that the typical payback period falls in the 5-to-6-month range, and the average 3-year ROI can reach 600 to 700 percent.
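For readers who want to sanity-check figures like these against their own numbers, the sketch below shows one common way to calculate payback period and ROI. The inputs are hypothetical round numbers chosen only to illustrate the arithmetic; they are not the data behind the research cited above. The simple definitions used here (a one-time cost, a constant monthly benefit, no discounting) are also an assumption.

```python
def payback_months(upfront_cost: float, monthly_benefit: float) -> float:
    """Months needed for cumulative benefit to cover a one-time cost."""
    if upfront_cost <= 0 or monthly_benefit <= 0:
        raise ValueError("cost and monthly benefit must be positive")
    return upfront_cost / monthly_benefit


def roi_percent(total_cost: float, total_benefit: float) -> float:
    """Simple ROI: net gain divided by cost, expressed as a percentage."""
    if total_cost <= 0:
        raise ValueError("cost must be positive")
    return (total_benefit - total_cost) * 100 / total_cost


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    cost = 500_000            # one-time implementation cost
    monthly_benefit = 100_000  # value delivered per month once live
    months = 36                # 3-year horizon

    payback = payback_months(cost, monthly_benefit)
    roi = roi_percent(cost, monthly_benefit * months)
    print(f"Payback: {payback:.1f} months, 3-year ROI: {roi:.0f}%")
```

With these placeholder inputs, the payback period works out to 5 months and the 3-year ROI to 620 percent. Substituting your own cost and benefit estimates shows how sensitive both figures are to the monthly benefit you assume.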

That’s not to mention the more intangible benefits of employee morale and productivity.

If your analytics team has grown to double digits, or you’re expecting that kind of growth in the coming months, it behooves you to start thinking about scale. Or, if you’re in an engineering-heavy industry with smart folks who like to tinker with Python and ML, it might be best to support them with a comprehensive solution, or you risk shadow systems being stood up. In either case, an effective MLOps Platform can be an answer to your woes.